The Complete Guide to Retrieval-Augmented Generation (RAG) in 2025

Discover how Retrieval-Augmented Generation (RAG) works in 2025. Build intelligent apps with OpenAI or local models like Mistral using LangChain and FAISS, no fine-tuning required.

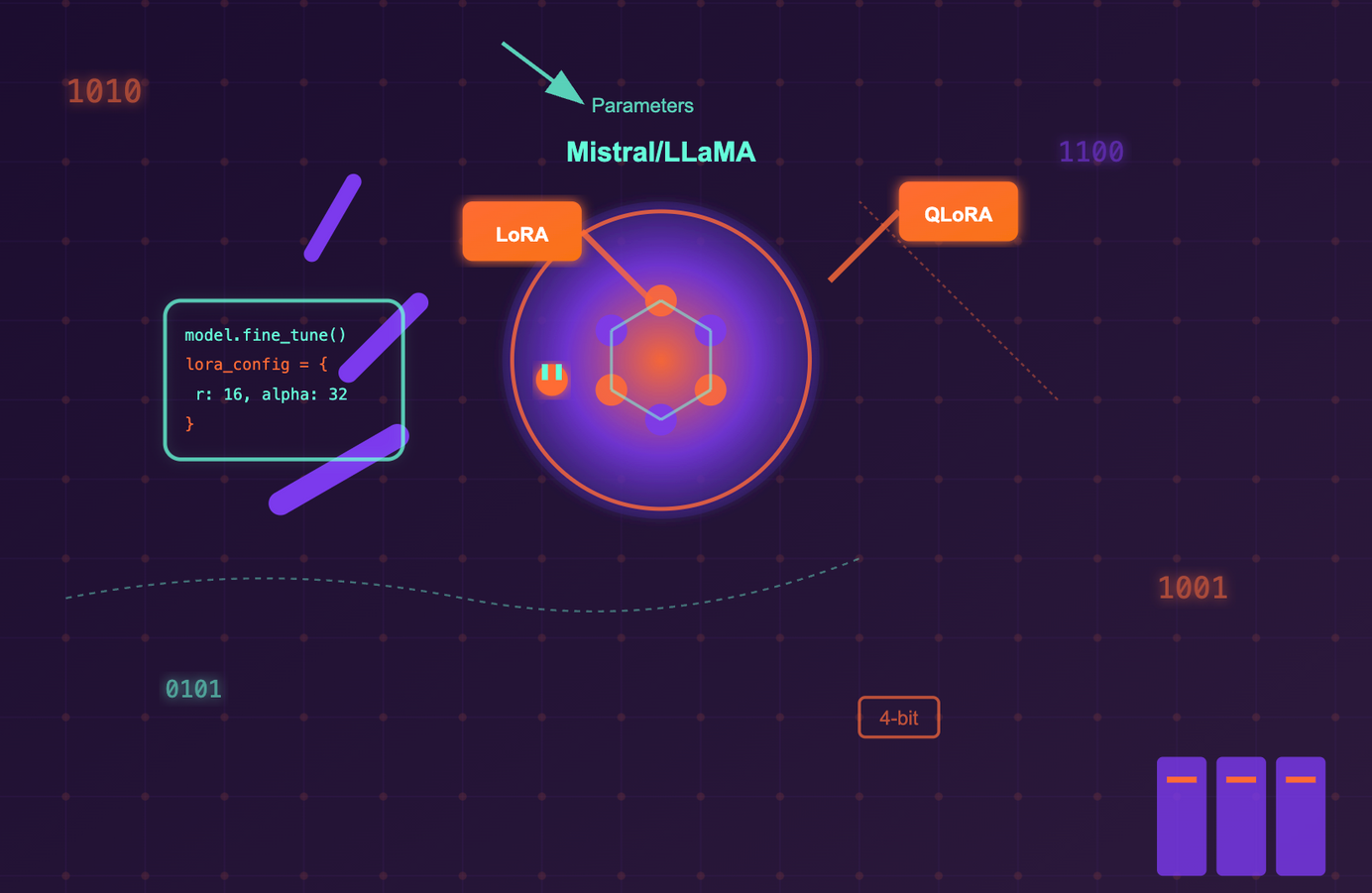

Retrieval-Augmented Generation (RAG) is one of the most exciting innovations in modern AI, especially in the era of Large Language Models (LLMs). It helps models like GPT, Mistral, and LLaMA give more accurate, up-to-date, and context-aware answers by grounding generation in external knowledge sources.

In this guide, we'll break down what RAG is, how it works, when to use it, and how to build your own RAG-based AI system using tools like LangChain, FAISS, Hugging Face, and even open-source LLMs without requiring any paid APIs.

🔍 What is RAG (Retrieval-Augmented Generation)?

RAG is a technique where a language model retrieves relevant context from an external knowledge base (e.g., vector database, documents, PDFs, websites) and then generates a response grounded in that data.

Think of it as giving your LLM a memory boost without retraining it.

🧠 Why Use RAG?

- Accuracy: Reduces hallucination by grounding answers in retrieved source documents

- Freshness: Lets the model draw on information newer than its training data, without retraining

- Custom Knowledge: Enables chatbots or apps to answer questions from your private or domain-specific data

- Efficiency: Updating the model's knowledge means re-indexing documents, not fine-tuning the model

⚙️ How RAG Works — Step by Step

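- Indexing (done ahead of time): Documents are split into chunks, each chunk is converted into a vector by an embedding model, and the vectors are stored in a vector database.

- Query Encoding: The user's query is converted into a vector using the same embedding model (e.g., SentenceTransformers, OpenAI Embeddings).

- Retrieval: The chunks whose vectors are most similar to the query vector are fetched from the vector database (e.g., FAISS, Pinecone).

- Contextualization: The retrieved chunks are inserted into the LLM prompt alongside the query.

- Generation: The LLM generates an answer based on the query plus the retrieved context.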
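
To make the steps above concrete, here is a minimal, dependency-free sketch. It is not how LangChain or FAISS work internally: the "embedding" is a toy bag-of-words count vector standing in for a real embedding model, the "vector database" is a plain Python list searched by brute-force cosine similarity, and the final LLM call is left out. The helper names (`embed`, `cosine`, `build_index`, `retrieve`, `build_prompt`) are hypothetical, chosen for illustration.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(docs: list[str]) -> list[tuple[str, Counter]]:
    """Indexing: embed every document once, ahead of query time."""
    return [(doc, embed(doc)) for doc in docs]


def retrieve(query: str, index: list[tuple[str, Counter]], k: int = 1) -> list[str]:
    """Query encoding + retrieval: brute-force top-k by cosine similarity."""
    query_vec = embed(query)
    ranked = sorted(index, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]


def build_prompt(query: str, context: list[str]) -> str:
    """Contextualization: place the retrieved text into the prompt."""
    context_block = "\n".join(f"- {chunk}" for chunk in context)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {query}\nAnswer:"
    )


docs = ["LangChain is for LLM orchestration.", "FAISS enables fast vector search."]
index = build_index(docs)
query = "What is LangChain?"
context = retrieve(query, index, k=1)
prompt = build_prompt(query, context)
# Generation: `prompt` would now be sent to an LLM (OpenAI, Mistral via Ollama, etc.).
print(prompt)
```

Swapping `embed` for a real embedding model and the list for a proper vector index gives roughly the flow that the LangChain examples in this guide automate.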

🧰 Tools to Build a RAG Pipeline

- LangChain: Orchestrates the retrieval + generation flow with chains, tools, and agents

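- FAISS / Weaviate / Pinecone / Chroma: Store and search embeddings (FAISS is an in-process similarity-search library; Weaviate, Pinecone, and Chroma are vector databases)

- SentenceTransformers / Hugging Face: For generating semantic embeddings

- OpenAI / Mistral / LLaMA: LLMs that accept retrieved context via the prompt; Ollama runs open models such as Mistral and LLaMA locally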

🛠 Building a RAG App — With OpenAI (API Required)

✅ Step 1: Install Required Packages

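```bash
pip install "langchain<1.0" langchain-openai langchain-community faiss-cpu
```

✅ Step 2: Set Up OpenAI API Key

You’ll need to create an account at https://platform.openai.com/ and generate an API key.

You can set the key as an environment variable in your terminal:

```bash
export OPENAI_API_KEY="your-api-key"
```

Or in Python:

```python
import os

# Set this before creating any OpenAI-backed objects; never commit real keys to source control
os.environ["OPENAI_API_KEY"] = "your-api-key"
```

✅ Step 3: Build the Pipeline

```python
from langchain.chains import RetrievalQA  # legacy chain from the LangChain 0.x line (hence the version pin)
from langchain_community.vectorstores import FAISS
from langchain_openai import OpenAI, OpenAIEmbeddings

# A tiny in-memory knowledge base
texts = ["LangChain is for LLM orchestration.", "FAISS enables fast vector search."]

# Embed the texts with OpenAI and index them in FAISS
embeddings = OpenAIEmbeddings()
db = FAISS.from_texts(texts, embedding=embeddings)

# Wire the retriever and the LLM into a question-answering chain
qa_chain = RetrievalQA.from_chain_type(
    llm=OpenAI(),
    retriever=db.as_retriever(),
)

# .invoke() replaces the deprecated .run(); RetrievalQA takes "query" and returns "result"
response = qa_chain.invoke({"query": "What is LangChain?"})
print(response["result"])
```

🛠 Building a RAG App — With Local Models (No API Key Required)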

```python
# Install: pip install "langchain<1.0" langchain-community langchain-huggingface \
#          langchain-ollama sentence-transformers faiss-cpu
# Requires a running Ollama server with the model pulled: ollama pull mistral
from langchain.chains import RetrievalQA
from langchain_community.vectorstores import FAISS
from langchain_huggingface import HuggingFaceEmbeddings
from langchain_ollama import OllamaLLM

# Step 1: Create local embeddings and index them in FAISS
texts = ["LangChain is for LLM orchestration.", "FAISS enables fast vector search."]
embeddings = HuggingFaceEmbeddings(model_name="sentence-transformers/all-MiniLM-L6-v2")
db = FAISS.from_texts(texts, embedding=embeddings)

# Step 2: Set up the LLM (local, via Ollama)
llm = OllamaLLM(model="mistral")

# Step 3: Create the QA chain
qa_chain = RetrievalQA.from_chain_type(
    llm=llm,
    retriever=db.as_retriever(),
)

response = qa_chain.invoke({"query": "What is LangChain?"})
print(response["result"])
```

✅ After a one-time download of the embedding model and the Mistral weights, this version runs entirely locally with open-source models and needs no API key.

📦 Common Use Cases

- AI Assistants with Enterprise Knowledge (e.g., chatbots that answer HR or legal queries)

- Documentation Bots (e.g., searching manuals, API docs)

- Academic Search Engines (e.g., arXiv-powered GPT)

- Legal & Healthcare QA with grounded documents

❗ Challenges and Considerations

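- Latency: The extra retrieval step (embedding the query and searching the index) runs before generation starts, which can slow down real-time apps

- Token Limits: LLMs have finite context windows, so long documents must be split into chunks carefully and only the most relevant chunks placed in the prompt

- Security: Retrieved documents can surface in answers, so a public app needs access controls that limit retrieval to documents each user is allowed to see

- Evaluation: RAG quality is hard to measure because both retrieval (were the right chunks found?) and generation (is the answer faithful to them?) must be assessed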
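
The token-limit challenge above is usually handled by chunking. A minimal sketch of fixed-size chunking with overlapping windows is shown below. It is an illustration, not a library API: `chunk_text` is a hypothetical helper, and it counts characters as a simple stand-in for tokens. Production pipelines typically measure in tokens and split on natural boundaries first (LangChain's `RecursiveCharacterTextSplitter` takes that approach).

```python
def chunk_text(text: str, chunk_size: int = 500, overlap: int = 100) -> list[str]:
    """Split text into windows of at most chunk_size characters.

    Consecutive windows share `overlap` characters, so text near a boundary
    appears in both neighboring chunks, reducing the chance that a fact is
    split across two chunks.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    step = chunk_size - overlap  # how far each window advances
    chunks: list[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


print(chunk_text("abcdefghij", chunk_size=4, overlap=1))  # ['abcd', 'defg', 'ghij']
```

Larger overlaps preserve more context across boundaries at the cost of more chunks to embed and store.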

🚀 Bonus: Scaling RAG with Advanced Techniques

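- Hybrid Search: Combine keyword (e.g., BM25) and vector search for better recall, so exact terms such as product codes or names are not missed

- Chunking Strategies: Use recursive chunking (split on paragraphs, then sentences) with overlapping windows between adjacent chunks

- Caching: Use Redis or an in-memory cache to reuse results for repeated queries

- Prompt Optimization: Use templates and summarization to stay within token limits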
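
The hybrid-search bullet does not say how keyword and vector results should be combined. One common choice is reciprocal rank fusion (RRF), sketched below as an assumption rather than the method this guide prescribes. `reciprocal_rank_fusion` is a hypothetical helper, and the document IDs are made-up results standing in for a BM25 index and a FAISS index.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several ranked lists of document IDs into a single ranking.

    A document's fused score is the sum of 1 / (k + rank) over every list
    it appears in, with ranks starting at 1. k=60 is the value commonly
    used for RRF; a larger k flattens the advantage of top ranks.
    """
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical results for the same query from two retrievers
keyword_hits = ["doc3", "doc1", "doc7"]  # e.g., from a BM25 keyword index
vector_hits = ["doc1", "doc5", "doc3"]   # e.g., from a FAISS vector index

fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
print(fused)  # ['doc1', 'doc3', 'doc5', 'doc7']
```

Here `doc1` ranks first because both retrievers placed it near the top, even though keyword search alone preferred `doc3`. Because RRF uses only ranks, the two retrievers' raw scores (BM25 scores vs. cosine similarities) never need to be put on a common scale.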

✅ Conclusion

Retrieval-Augmented Generation bridges the gap between static language models and dynamic, real-world information. Whether you're using OpenAI’s GPT or a local model like Mistral, RAG empowers developers to build smarter, safer, and more useful AI applications without needing to fine-tune large models.

Start small, iterate fast, and ground your AI in your data.
